SwiftEye Medical:

AI COVID-19 Detection

🔹 Outcome: Built a YOLOv5 model to detect COVID-19 and pneumonia from chest X-rays; the best run reached 0.5776 precision and 0.4543 mAP@0.5.

🔹 Role & Skills: Conducted research, supported data pipeline design and ML model training, built prototype dashboard, mobile UX, and pitch deck.

🔹 Key Strengths Shown: ML prototyping, healthcare UX design, applied computer vision, impact storytelling.

Lessons Learned

Technical vs. Clinical Standards Gap: 57.76% precision excited us until radiologists said clinical deployment needs 90%+ accuracy. Technical feasibility ≠ product viability.

Limited Dataset Scope: We started out wanting multi-condition detection, but the dataset only supported COVID-19 classification. Lesson: align technical scope with available resources upfront.

Regulatory Blind Spot: We left FDA/HIPAA requirements out of the roadmap, and the 6-month timeline became 18+ months. Lesson: front-load compliance research in healthcare products.

1. Identifying the Problem: Slow & Inconsistent Diagnosis

Approach

To validate feasibility and user needs, we conducted data analysis, ML prototyping, and UX research to design an effective AI-driven solution.

Problem Overview

COVID-19 spreads rapidly and can cause severe respiratory complications. The current gold standard for diagnosis, RT-PCR testing, has major limitations:

Slow results: Takes 24+ hours to return findings.

Low sensitivity: 30-70%, leading to false negatives and delayed treatment.

Limited access: Requires laboratories and trained personnel, making it difficult to scale testing in overwhelmed healthcare systems.

User Pain Points

Delays in treatment & isolation

False negatives increase spread

Limited access to rapid testing

Competitive Gaps

RT-PCR is slow and resource-heavy.

Existing imaging tools lack AI automation for detection.

Opportunity

AI-powered imaging can provide faster, scalable, and automated COVID-19 detection, improving accuracy and accessibility.

2. Developing the AI Model: Feasibility & Implementation

Technology Feasibility

AI in medical imaging: Research shows machine learning can analyze chest X-rays & CT scans for COVID-19 detection.

Comparable performance: Imaging sensitivity is reported at 98% for CT scans and 69% for X-rays, on par with or above RT-PCR's 30-70%, which makes imaging a viable diagnostic aid.

Existing gaps: Current systems lack automated classification & severity tracking.

Market Demand

COVID-19 diagnostics remains a priority: Rapid, accurate detection is essential for outbreak management.

AI adoption in healthcare is growing: Increasing investment in automation for diagnostics & triage.

Clinician need for AI support: Faster imaging-based tools can assist with triage while waiting for RT-PCR results.

Strategic Fit

Bridges a diagnostic gap: Faster and more accessible alternative to slow lab-based testing.

Scalable beyond COVID-19: Potential for pneumonia detection & broader AI-driven radiology applications.

Opportunity

An AI-powered COVID-19 diagnostic tool can enhance screening speed, reduce reliance on RT-PCR, and improve decision-making for healthcare professionals.

3. Prototyping the AI-Powered COVID-19 Detection System

Building & Evaluating Machine Learning Models

To develop an AI-powered COVID-19 detection system, we focused on:

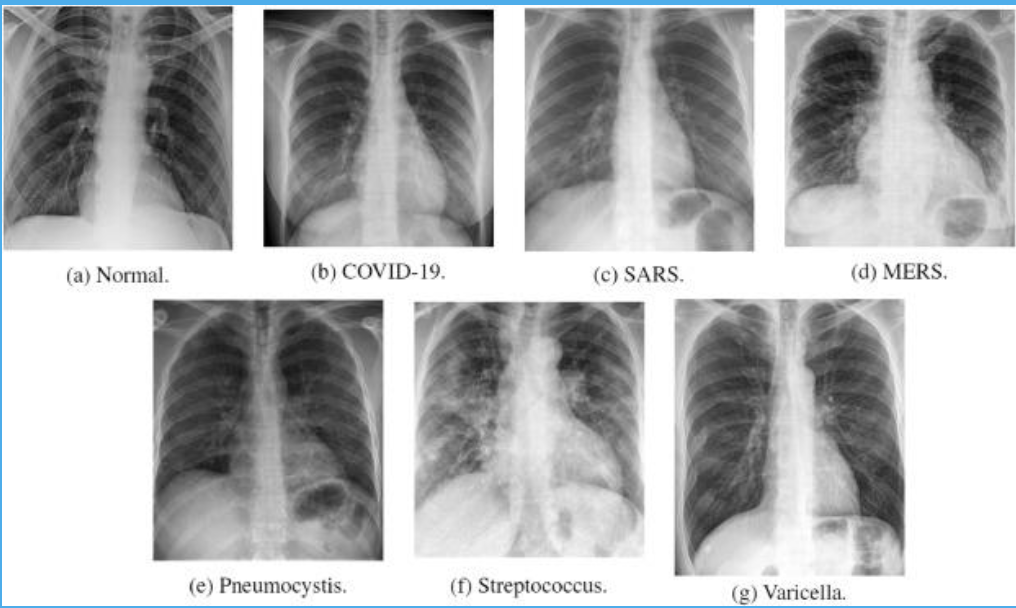

Preprocessing chest X-ray datasets for training deep learning models.

Prototyping & optimizing object detection models (YOLO v5, Faster R-CNN).

Designing an analytics dashboard & mobile app prototype for usability testing.

Machine Learning Development & Model Performance

Data Collection & Preprocessing

Sourced chest X-ray datasets from medical repositories.

Resized images (512x512, 256x256 pixels) to optimize training.

Applied data augmentation to improve generalization.
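As an illustration of how augmentation interacts with detection labels, here is a minimal sketch of one common transform, a horizontal flip, applied to an image and its YOLO-format boxes. The specific augmentations used in the project are not listed above, so the choice of flip is an assumption, and all function names here are hypothetical. The sketch also shows why resizing between 512x512 and 256x256 does not require relabeling: YOLO labels are normalized to the image size.

```python
from typing import List, Tuple

# A YOLO-format label: (class_id, x_center, y_center, width, height),
# with the four box values normalized to the range [0, 1].
YoloBox = Tuple[int, float, float, float, float]

# Grayscale image as rows of pixel intensities (illustrative stand-in
# for a real image array).
Image = List[List[int]]


def hflip_image(image: Image) -> Image:
    """Mirror the image left-to-right by reversing every row."""
    return [row[::-1] for row in image]


def hflip_boxes(boxes: List[YoloBox]) -> List[YoloBox]:
    """Mirror box centers; y-center, width, and height are unchanged."""
    return [(cls, 1.0 - xc, yc, w, h) for cls, xc, yc, w, h in boxes]


def to_pixels(box: YoloBox, size: int) -> Tuple[float, float, float, float]:
    """Convert a normalized box to (x_min, y_min, x_max, y_max) in pixels
    for a square image of the given side length."""
    _, xc, yc, w, h = box
    return (
        (xc - w / 2) * size,
        (yc - h / 2) * size,
        (xc + w / 2) * size,
        (yc + h / 2) * size,
    )


# Hypothetical label: one box centered in the left half of the image.
label = (0, 0.25, 0.5, 0.2, 0.4)
flipped = hflip_boxes([label])[0]   # x_center moves from 0.25 to 0.75
box_512 = to_pixels(label, 512)     # same normalized label, 512x512 image
box_256 = to_pixels(label, 256)     # same normalized label, 256x256 image
```

Because the labels are normalized, the pixel coordinates at 512x512 are exactly twice those at 256x256, so one label file can serve both training resolutions.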

Model Training & Evaluation

Two deep learning architectures were tested:

YOLO v5: Optimized for real-time detection.

Faster R-CNN: Two-stage detector evaluated for more precise lesion localization.

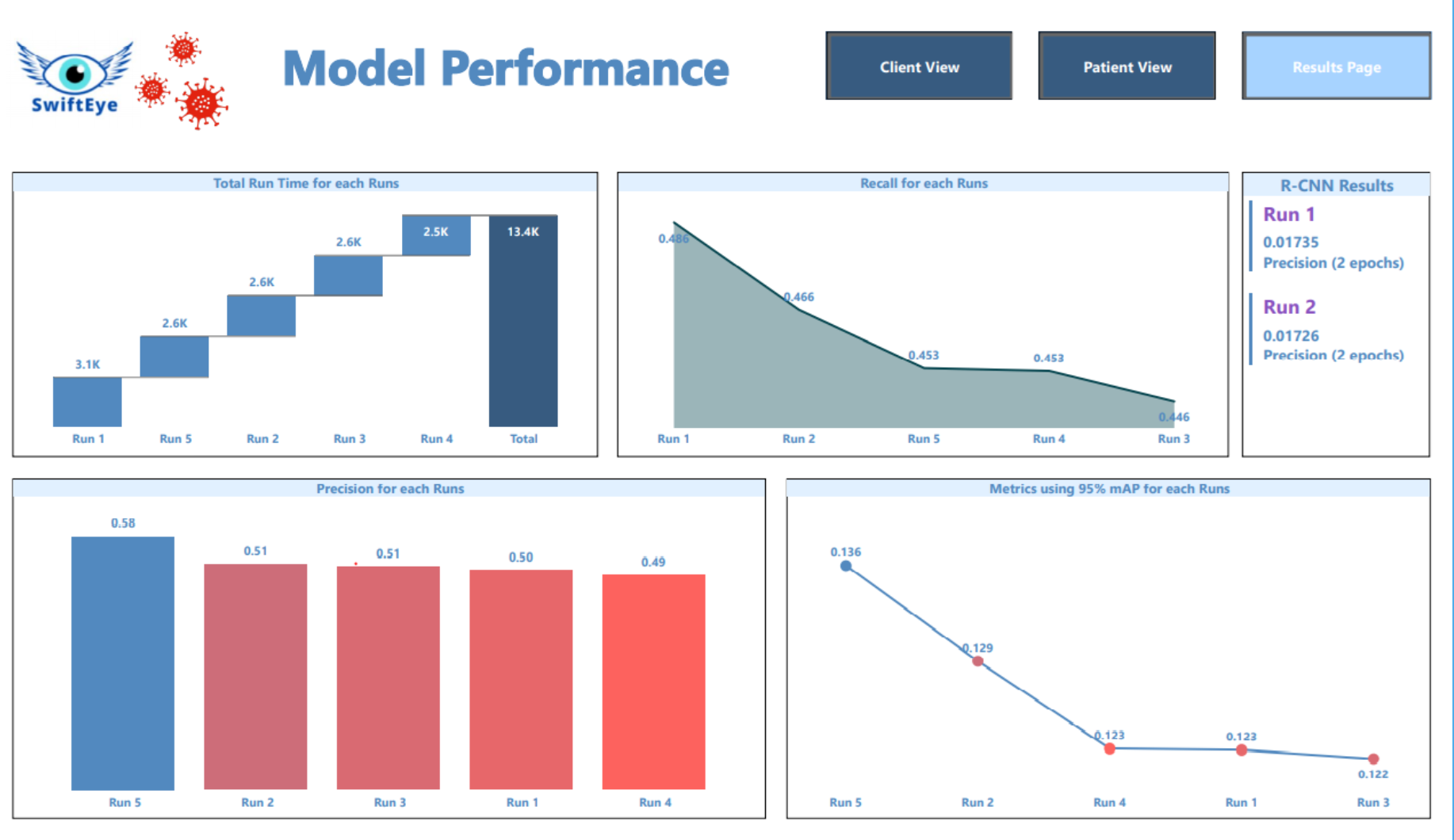

Model Performance & Insights

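YOLO v5 Run 5 (512x512 resolution) was the best-performing model, achieving:

Precision: 0.5776 (about 58% of the regions the model flagged as COVID-19 were correct).

Mean Average Precision (mAP@0.5): 0.4543. This averages precision across recall levels, counting a predicted box as correct when it overlaps a ground-truth region with an IoU of at least 0.5. The score shows moderate localization of affected lung regions, with clear room for improvement.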
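To make these metrics concrete, here is a minimal sketch of how precision at an IoU threshold of 0.5 can be computed for a single image and a single class. It is a simplification for illustration, not the evaluation code used in the project: full mAP additionally averages precision over recall levels and over classes. The function names and example boxes are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_at_iou(
    preds: List[Tuple[Box, float]],  # (box, confidence score)
    truths: List[Box],
    threshold: float = 0.5,
) -> float:
    """Fraction of predictions that match a not-yet-matched ground-truth
    box with IoU >= threshold. Predictions are processed in order of
    descending confidence, and each ground-truth box can be matched once."""
    matched = set()
    true_positives = 0
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        best_iou, best_idx = 0.0, -1
        for i, truth in enumerate(truths):
            if i in matched:
                continue
            overlap = iou(box, truth)
            if overlap > best_iou:
                best_iou, best_idx = overlap, i
        if best_iou >= threshold:
            matched.add(best_idx)
            true_positives += 1
    return true_positives / len(preds) if preds else 0.0


# Hypothetical example: one real lesion, two predictions (one hit, one miss).
truths = [(0.0, 0.0, 10.0, 10.0)]
preds = [((0.0, 0.0, 10.0, 10.0), 0.9), ((20.0, 20.0, 30.0, 30.0), 0.8)]
result = precision_at_iou(preds, truths)  # one of two predictions is correct
```

Read this way, a precision of 0.5776 means roughly four in ten flagged regions would be false alarms, which is why the model fits a review-assist role rather than standalone diagnosis.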

Hyperparameter Optimization:

Trained each model for 50 epochs (Run 6 was extended to 75 epochs to reduce underfitting).

Adjusted learning rate, momentum, and weight decay to improve convergence & reduce overfitting.
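For readers less familiar with these three hyperparameters, here is a minimal sketch of one stochastic gradient descent update with momentum and L2 weight decay, written in plain Python. It assumes an SGD-style optimizer and follows the common convention where the velocity accumulates gradients and the learning rate scales the final step. The function name and all numeric values are hypothetical; the actual settings used in the runs are not given above.

```python
from typing import List, Tuple


def sgd_step(
    weights: List[float],
    grads: List[float],
    velocity: List[float],
    lr: float,
    momentum: float,
    weight_decay: float,
) -> Tuple[List[float], List[float]]:
    """Apply one SGD update and return (new_weights, new_velocity)."""
    new_weights, new_velocity = [], []
    for w, g, v in zip(weights, grads, velocity):
        g = g + weight_decay * w   # weight decay: pull weights toward zero
        v = momentum * v + g       # momentum: blend in the previous direction
        new_velocity.append(v)
        new_weights.append(w - lr * v)  # learning rate: size of the step
    return new_weights, new_velocity


# Illustrative values only (not the project's settings).
w, v = [1.0], [0.0]
w, v = sgd_step(w, [0.5], v, lr=0.1, momentum=0.9, weight_decay=0.0)
w, v = sgd_step(w, [0.5], v, lr=0.1, momentum=0.9, weight_decay=0.0)
```

In this picture, a higher learning rate or momentum speeds up convergence at the risk of instability, while weight decay shrinks weights each step, which is one way to limit overfitting.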

Key Takeaway:

At this performance level, the best YOLO v5 model is suited to a decision-support role: flagging candidate lung abnormalities for radiologist review within a human-in-the-loop workflow.

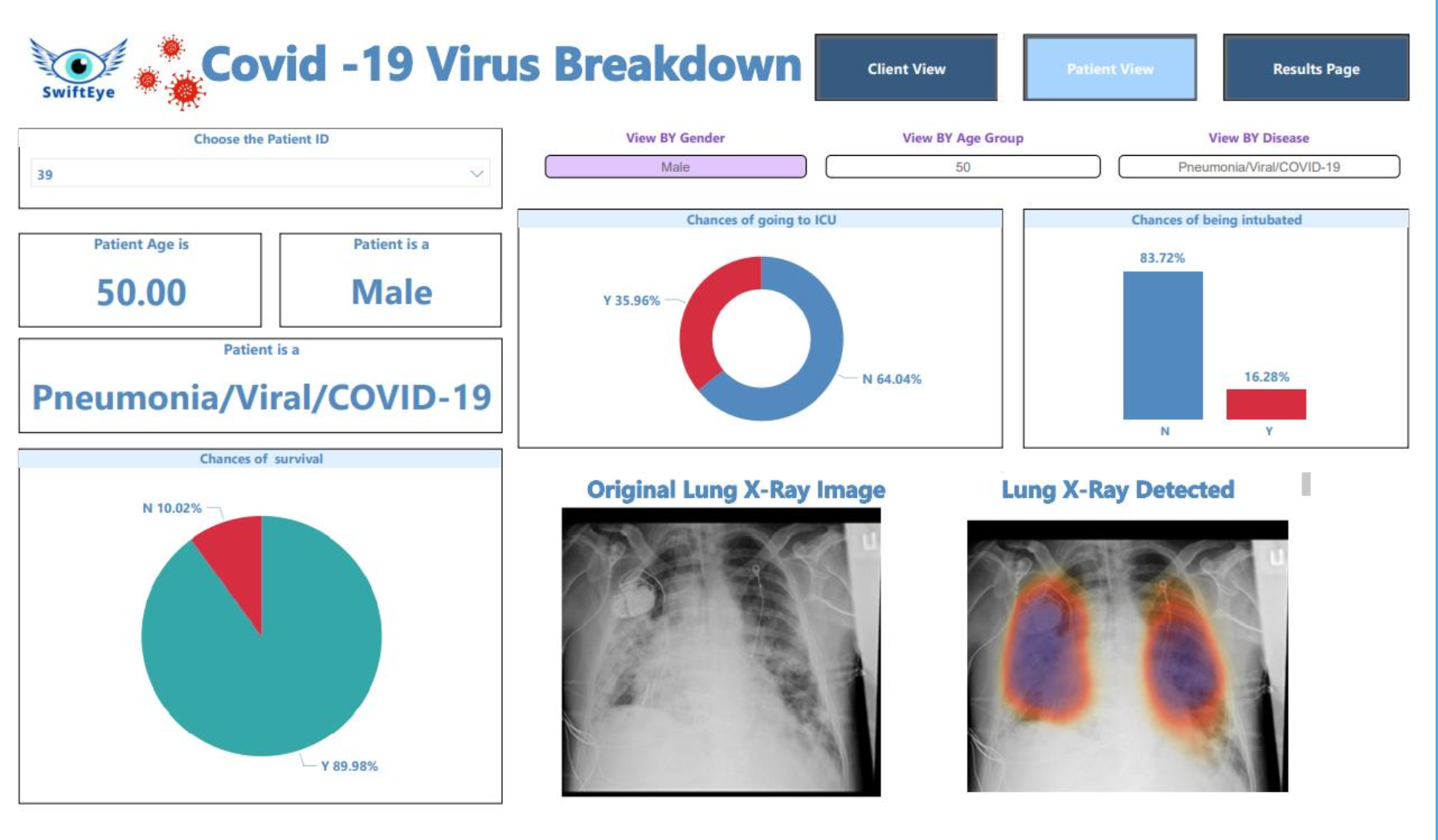

Application: Conceptualizing a Doctor-Facing Analytics Tool

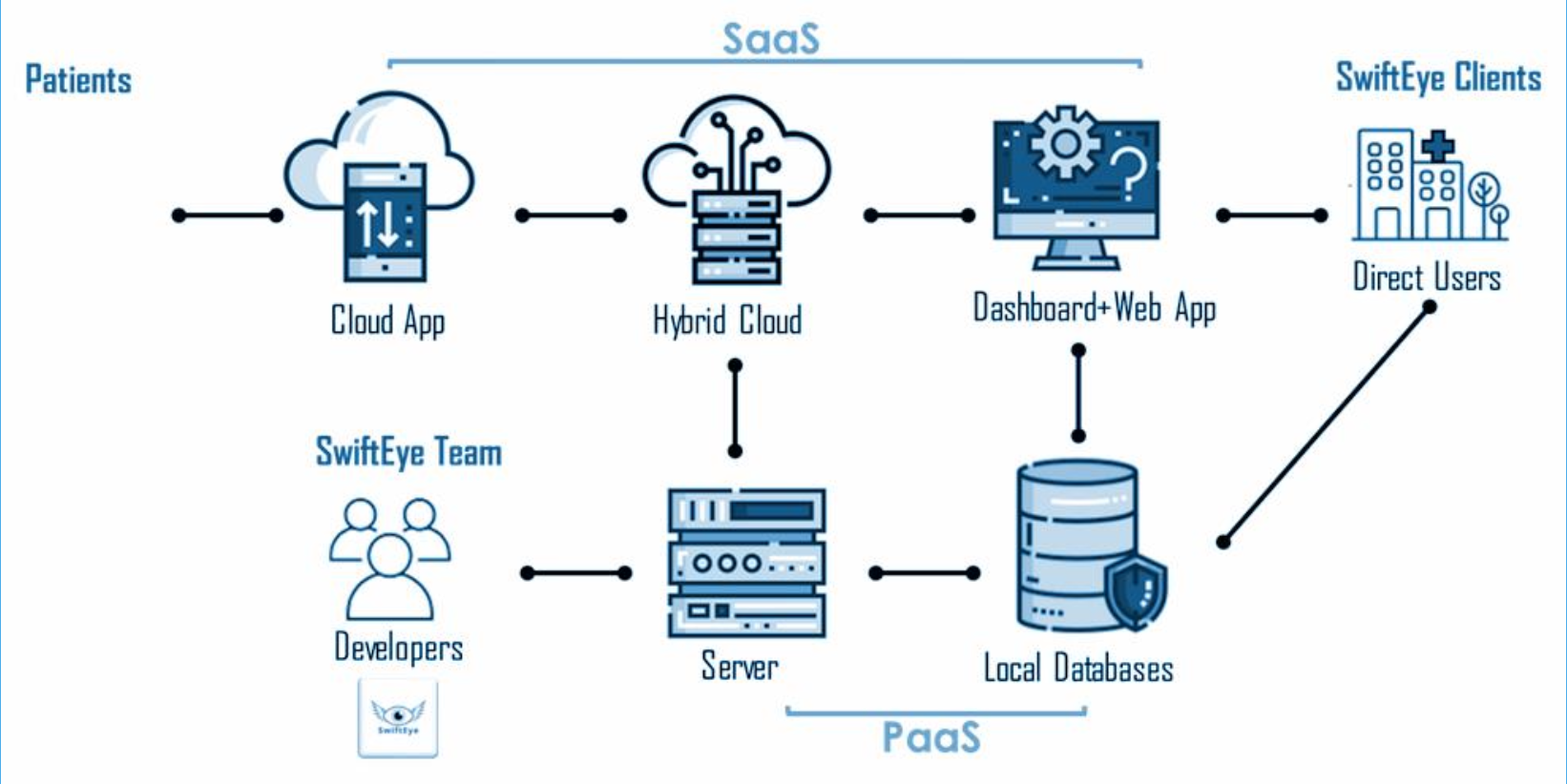

Beyond model development, we explored how AI could be integrated into clinical workflows through an interactive dashboard & mobile app prototype:

Real-time X-ray analysis & severity scoring to assist radiologists.

Trend visualization & predictive insights for patient monitoring.

User-friendly mobile interface for rapid screening in remote/hospital settings.

This phase demonstrated early technical feasibility for AI-based COVID-19 detection, laying the groundwork for further development, regulatory approval, and clinical integration.

4. Roadmap & Implementation Strategy

To structure development and deployment, I organized the roadmap into four phases, prioritizing technical feasibility first, then clinical validation, then adoption.

Phase 1: Data Collection & Model Prototyping (Completed)

Objective: Establish technical feasibility through dataset preparation and AI model development.

Collected & Preprocessed X-ray Data: Standardized imaging formats for training.

Trained YOLO v5 & Faster R-CNN Models: Iterated on hyperparameters for optimal COVID-19 detection.

Validated Model Performance: Identified YOLO v5 Run 5 as the best-performing model among the runs tested.

Phase 2: Model Testing & UX Design (Completed)

Objective: Validate model accuracy and conceptualize user-facing solutions.

Tested Models on Various Resolutions: Assessed trade-offs in accuracy & processing speed.

Developed Model Interpretability Tools: Enabled transparency in AI-driven decisions.

Designed AI-Powered Dashboard & Mobile Prototype: Conceptualized doctor-facing UI for real-time analysis.

Phase 3: Clinical Validation & Feedback Iteration (Future Work)

Objective: Ensure real-world clinical adoption through testing and compliance.

Pilot Testing with Radiologists: Evaluate AI-assisted workflows in hospitals.

Refine Model with Clinician Feedback: Improve accuracy & usability based on real-world testing.

Address Regulatory & Compliance Needs: Align with FDA & HIPAA standards for AI-driven diagnostics.

Phase 4: Deployment & Scalability (Future Work)

Objective: Expand adoption and integrate AI into broader radiology applications.

Expand to Additional Imaging Modalities: Adapt AI for CT scans & lung ultrasounds.

Develop Full-Scale SaaS Platform: Integrate AI-powered diagnostics into hospital IT systems.

Enable Continuous Model Learning: Implement feedback loops from real-world data so the model keeps improving after deployment.

Key Takeaways: How This Showcases My Product Thinking

AI-Driven Feasibility Testing

Validated model performance across multiple architectures & hyperparameters.

Human-Centered Design

Designed UX prototypes tailored for radiologists & frontline healthcare workers.

Scalable Roadmap

Defined phased implementation strategy for AI integration in real-world clinical settings.